Chapter 5 More on thematic maps

In this session we are going to discuss some additional features around thematic maps we did not cover in week 3. We are going to discuss how to address some of the problems we confront when we are trying to use use choropleth maps, as well as some alternatives to point based maps. We will also introduce the modifiable area unit problem.

Before we do any of this, we need to load the libraries we will use today:

cartogramDClusterdplyrgeogridggplot2ggspatialhexbinjanitorsfspspdeptmapreadxlreadrgeodaData

Some of the above are new packages we will use for the first time this week. Remember, if you don’t already have these you will need to install them.

5.1 Pro-tip: do I need to install this package?

You might have noticed that in your list of available packages you might see more than you remember downloading. The idea of dependencies has come up throughout the semester. Packages have dependencies when their code is dependent on (uses code from) another package. For example, if we write some code that we think will be useful, so we release this in the form of the package “manchesterR”, but we use ggplot2 in the code, then ggplot2 will be a dependency of manchesterR. As a default, R will install all the dependencies for a package when we install our package, manchesterR. So this way we might end up with some packages there that we didn’t realise we had.

Why are we telling you this? Well you should always check if you have a package, before installing it. And I wanted to share with you some neat code from a Stackoverflow discussion (if you are not yet familiar with Stackoverflow you have not been Google-ing your error messages enough) here to do this. We’ll comment it a bit, so you can follow along what it does but you don’t have to if you don’t want to. This is just an extra.

So as a first step, you have to assign a list of all the packages you have to check to an object. Let’s say I tell you that today we will be using the following packaes: “sp”, “classInt”, “RColorBrewer”, “ggplot2”, “hexbin”, “ggmap”, “XML”, and “dplyr”. Then you can add these to an object called libs, using the c() function:

Now you can run the below bit of code, and you will see in the console an output of what is and isn’t installed, as well as install the packages that are not!

for (x in libs) {

# Check if the package is installed

if (!(x %in% rownames(installed.packages()))) {

# If not installed, print a message and install the package

cat("Installing ", x, "...\n")

install.packages(x)

} else {

# If installed, print a message

cat(x, "is already installed.\n")

}

# Load the package

library(x, character.only = TRUE)

}As you can see if you read through the comments there, this bit of code checks each package in the list you pass to tbe libs object when you create it, and if it is not installed it installs for you, and if it is, it just loads it for you. It can be a handy bit of code to keep around.

We also want to introduce pacmac, developed by Dason Kurkiewicz, to make your life a lot easier in the realm of R packages. While the library() function does its job, but could be the tedious task, installing and loading each package one by one. The p_load() function, a single line code will automatically checks for missing missing packages and installs/loads all libraries into you R. So, try!

5.2 Smoothing rates: adjusting for small sample noise

In week 3 we discussed how to map rates. It seems a fairly straightforward issue: you calculate a rate by dividing your numerator (e.g., number of crimes) by an appropriately selected denominator (e.g., daytime population). You get your variable with the relevant rate and you map it using a choropleth map. However, things are not always that simple.

Rates are funny animals. Gelman and Price (1999) go so far as to suggest that all maps of rates are misleading. The problem at hand is well known in spatial epidemiology: “plotting observed rates can have serious drawbacks when sample sizes vary by area, since very high (and low) observed rates are found disproportionately in poorly-sampled areas” (Gelman and Price 1999, 3221). There is associated noise for those areas where the denominators give us a small sample size. And it is hard to solve this problem.

Let’s illustrate with an example. We are going to use historic data on homicide across US counties. The dataset was used as the basis for the study by Baller et al. (2001). It contains data on homicide counts and rates for various decades across the US, as well as information on structural factors often thought to be associated with violence. The data is freely available through the webpage of Geoda, a clever point-and-click interface developed by Luc Anselin (a spatial econometrician and coauthor of the above paper) and his team, to make spatial analysis accessible. It is also available as one of the datasets in the geodaData package.

To read data available in a library we have loaded, we can use the data() function. If we check the class() of this object, we will see it was already stored in geodaData as a sf object.

## [1] "sf" "data.frame"Let’s look at the “ncvor” data. We can start by looking at the homicide rate for 1960.

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.000 0.000 2.783 4.504 6.885 92.937We can see that the county with the highest homicide rate in the 1960s had a rate of 92.936803 homicides per 100,000 individuals. That is very high. Just to put it into context in the UK the homicide rate is about 0.92 per 100,000 individuals. Where is that place? I can tell you it is a place called Borden. Check it out:

## old-style crs object detected; please recreate object with a recent sf::st_crs()## [1] 92.9368Borden county is in Texas. You may be thinking “Texas Chainsaw Massacre” perhaps? No, not really. Ed Gein, who inspired the film, was based and operated in Wisconsin. Borden’s claim to fame is rather more prosaic: it was named after Gail Borden, the inventor of condensed milk. So, what’s going on here? Why do we have a homicide rate in Borden that makes it look like a war zone? Is it that it is only one of the six counties where alcohol is banned in Texas?

To get to the bottom of this, we can look at the variable HC60 which tells us about the homicide count in Borden:

## [1] 1What? A total homicide count of 1. How can a county with just one homicide have a rate that makes it look like the most dangerous place in the US? To answer this, let’s look at the population of Borden county in the 1960s, contained in the PO60 variable.

## [1] 1076Well, there were about 1076 people living there. It is among some of the least populous counties in our data:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 208 9417 18408 57845 39165 7781984If you contrast that population count with the population of the average county in the US, that’s tiny. One homicide in such a small place can end up producing a big rate. Remember that the rate is simply dividing the number of relevant events by the exposure variable (in this case, population) and multiplying by a constant (in this case, 100,000 since we expressed crime rates in those terms). Most times Borden looks like a very peaceful place:

## [1] 0## [1] 0## [1] 0It has a homicide rate of 0 in most decades. But it only takes one homicide and, bang, it goes top of the league. So a standard map of rates is bound to be noisy. There is the instability that is introduced by virtue of having areas that may be sparsely populated and in which one single event, like in this case, will produce a very noticeable change in the rate.

In fact, if you look at the counties with the highest homicide rate in the “ncovr” dataset, you will notice all of them are places like Borden, areas that are sparsely populated, not because they are that dangerous, but because of the instability of rates. Conversely, the same happens with those places with the lowest rate. They tend to be areas with a very small sample size.

This is a problem that was first noted by epidemiologists doing disease mapping. But a number of other disciplines have now noted this and used some of the approaches developed by public health researchers that confronted this problem when producing maps of disease (aside: techniques and approaches used by spatial epidemiologists are very similar to those used by criminologists, in case you ever think of changing careers or need inspiration for how to solve a crime analysis problem).

One way of dealing with this is by smoothing or shrinking the rates. This basically, as the word implies, aims for a smoother representation that avoids hard spikes associated with random noise. There are different ways of doing that. Some ways use a non-spatial approach to smoothing, using something called a empirical Bayesian smoother. How does this work? This approach takes the raw rates and tries to “shrink” them towards the overall average. What does this mean? Essentially, we compute a weighted average between the raw rate for each area and the global average across all areas, with weights proportional to the underlying population at risk. What this procedure does is adjusting considerably (brought closer to the global average) the rates of smaller areas (those with a small population at risk), whereas the rates for the larger areas will barely change.

Here we are going to introduce the approach implemented in DCluster, a package developed for epidemiological research and detection of clusters of disease. Specifically, we can implement the empbaysmooth() function which creates a smooth relative risks from a set of expected and observed number of cases using a Poisson-Gamma model as proposed by Clayton and Kaldor (1987). The function empbaysmooth() expects two parameters, the expected value and the observed value. Let’s define them first.

#First we define the observed number of cases

ncovr$observed <- ncovr$HC60

#To compute the expected number of cases through indirect standardisation

#we need the overall incidence ratio

overall_incidence_ratio <- sum(ncovr$HC60)/sum(ncovr$PO60)

#The expected number of cases can then be obtained by multiplying the overall

#incidence rate by the population

ncovr$expected <- ncovr$PO60 * overall_incidence_ratioWith this parameters we can obtain the raw relative risk:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.0000 0.0000 0.6143 0.9940 1.5195 20.5105And then estimate the smoothed relative risk:

In the new object we generated, which is a list, you have an element which contains the computed rates. We can add those to our dataset:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.2336 0.8699 0.9365 0.9675 1.0347 2.7869We can observe that the dispersion narrows significantly and that there are fewer observations with extreme values once we use this smoother.

Instead of shrinking to the global relative risk, we can shrink to a relative rate based on the neighbours of each county. Shrinking to the global risk ignores the spatial dimension of the phenomenon being mapped out and may mask existing heterogeneity. If instead of shrinking to a global risk, we shrink to a local rate, we may be able to take unobserved heterogeneity into account. Marshall (1991) proposed a local smoother estimator in which the crude rate is shrunk towards a local, “neighbourhood”, rate. To compute this, we need the list of neighbours that surround each county (we will discuss this code in Chapter 11, so for now just trust we are computing the rate of the areas that surround each country):

ncovr_sp <- as(ncovr, "Spatial")

w_nb <- poly2nb(ncovr, row.names=ncovr_sp$FIPSNO)

eb2 <- EBlocal(ncovr$HC60, ncovr$PO60, w_nb)

ncovr$HR60EBSL <- eb2$est * 100000We can now plot the maps and compare them:

tmap_mode("plot")

map1 <- tm_shape(ncovr) +

tm_fill(

"HR60",

fill.scale = tm_scale_intervals(style="quantile", values = "brewer.reds"),

fill.legend = tm_legend(

title = "Raw rate",

position = c("left", "bottom"),

title.size = 0.8,

text.size = 0.5

)

) +

tm_title("Raw rate")

map2 <- tm_shape(ncovr) +

tm_fill(

"HR60EBSL",

fill.scale = tm_scale_intervals(style = "quantile", values = "brewer.reds"),

fill.legend = tm_legend(

title = "Local Smooth",

position = c("left", "bottom"),

title.size = 0.8,

text.size = 0.5

)

) +

tm_title("Local Smooth")

tmap_arrange(map1, map2)

Figure 5.1: Compare raw and smoothed rates across counties in the USA

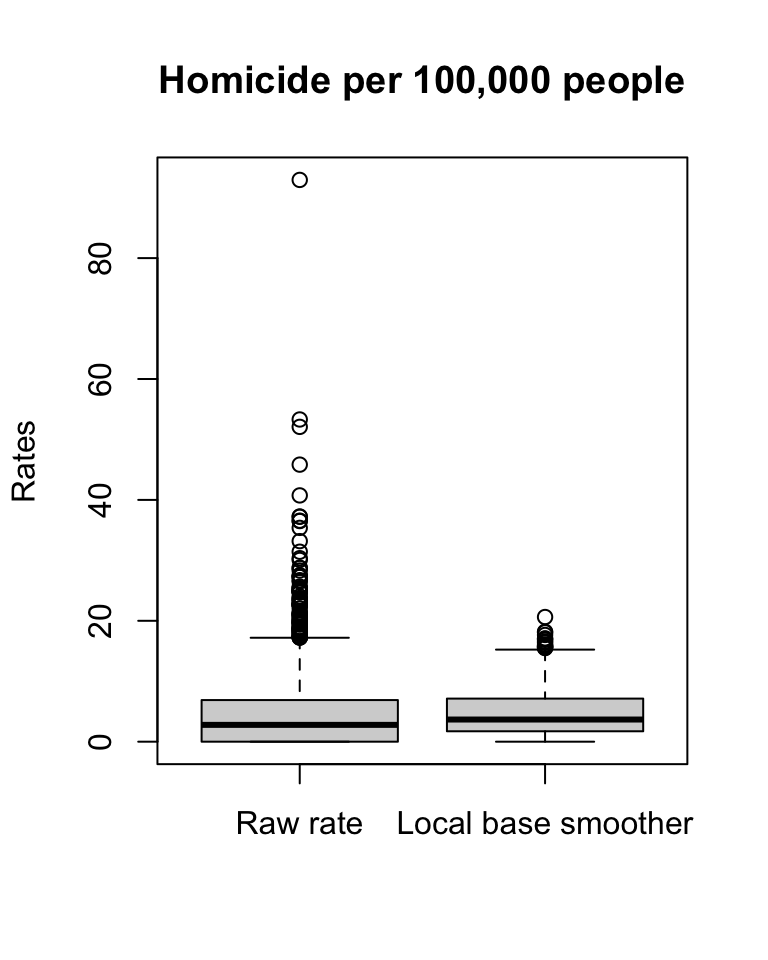

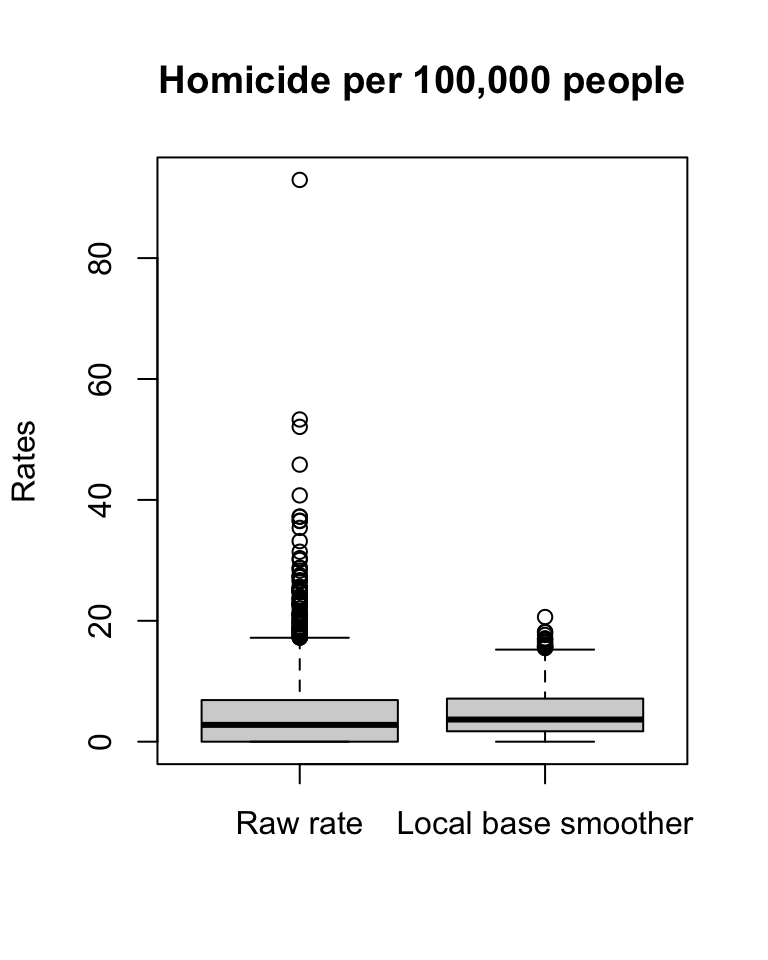

Notice that the quantiles are not the same, so that will make your comparison difficult. Let’s look at a boxplot of these variables. In the map of raw rates, we have the most variation.

#Boxplots with base R graphics

boxplot(ncovr$HR60, ncovr$HR60EBSL,

main = "Homicide per 100,000 people",

names = c("Raw rate",

"Local base smoother"),

ylab = "Rates")

Figure 5.2: Boxplots to compare variation in rates

The range for the raw rates is nearly 93. Much of the variation in observed homicide rates by county is attributable to statistical noise due to the small number of (observed and expected) homicides in low-population counties. Because of this noise, a disproportionate fraction of low-population counties are observed to have extremely high (or low) homicide rates when compared to typical counties in the United States. With smoothing, we reduce this problem, and if you contrast the maps you will see how this results in a clearer and smoother spatial pattern for the rate that is estimated borrowing information from their neighbours.

So to smooth or not too smooth? Clearly we can see how smoothing stabilises the rates and removes noise. But as Gelman and Price (1999) suggests this introduces other artifacts and autocorrelation into our estimates. Some people are also not too keen on maps of statistically adjusted estimates. Yet, the conclusions one can derive from mapping raw rates (when the denominator varies significantly and we have areas with small sample size) means that smoothing is often a preferable alternative (Waller and Gotway 2004). The problem we have with maps of estimates is that we need information about the variability and it is hard to map this out in a convenient way (Gelman and Price 1999). Lawson (2021), in relation to the similar problem of disease mapping, suggests that “at the minimum any map of relative risk for a disease should be accompanied with information pertaining to estimates of rates within each region as well as estimates of variability within each region” (p. 38), whereas “at the other extreme it could be recommended that such maps be only used as a presentational aid, and not as a fundamental decision-making tool” (p. 38).

5.3 Binning points

We’re going to move away from area-level data now, and go back to point-level data.

In GIS it is often difficult to present point-based data because in many instances there are several different points and data symbologies that need to be shown. As the number of different data points grows they can become complicated to interpret and manage which can result in convoluted and sometimes inaccurate maps. This becomes an even larger problem in web maps that are able to be depicted at different scales because smaller scale maps need to show more area and more data. This makes the maps convoluted if multiple data points are included.

In many maps there are so many data points included that little can be interpreted from them. In order to reduce congestion on maps many GIS users and cartographers have turned to a process known as binning.

Binning is defined as the process of grouping pairs of locations based on their distance from one another. These points can then be grouped as categories to make less complex and more meaningful maps.

Researchers and practitioners often require a way to systematically divide a region into equal-sized portions. As well as making maps with many points easier to read, binning data into regions can help identify spatial influence of neighbourhoods, and can be an essential step in developing systematic sampling designs.

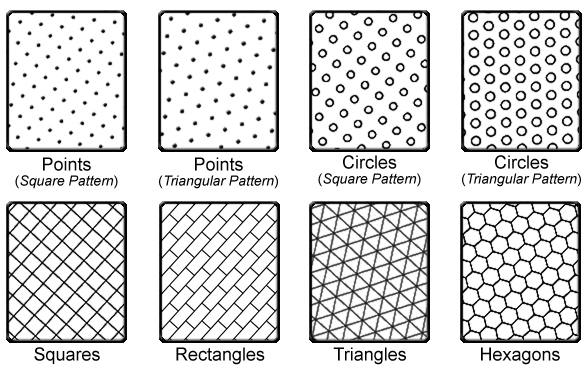

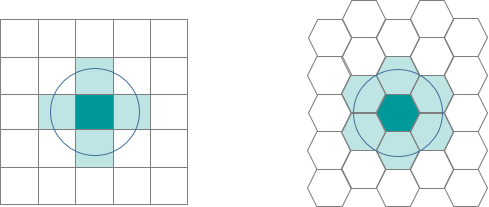

This approach to binning generates an array of repeating shapes over a user-specified area. These shapes can be hexagons, squares, rectangles, triangles, circles or points, and they can be generated with any directional orientation.

5.3.1 The Binning Process

Binning is a data modification technique that changes the way data is shown at small scales. It is done in the pre-processing stage of data analysis to convert the original data values into a range of small intervals, known as a bin. These bins are then replaced by a value that is representative of the interval to reduce the number of data points.

Spatial binning (also called spatial discretization) discretizes the location values into a small number of groups associated with geographical areas or shapes. The assignment of a location to a group can be done by any of the following methods: - Using the coordinates of the point to identify which “bin” it belongs to. - Using a common variable in the attribute table of the bin and the point layers.

5.3.2 Different Binning Techniques

Binning itself is a general term used to describe the grouping of a dataset’s values into smaller groups (Johnson, 2011). The bins can be based on a variety of factors and attributes such as spatial and temporal and can thus be used for many different projects.

5.3.2.1 Choropleth maps

You might be thinking, “grouping points into a larger spatial unit, haven’t we already done this when making choropleth maps?”. In a way you are right. Choropleth maps are another type of map to that uses binning. Proportional symbol and choropleth maps group similar data points together to show a range of data instead of many individual points. We’ve covered this extensively, and is generally the best approch to consider spatial grouping of your point variables, because the polygons (shapes) to which you are aggregating your points are meaningful. You can group into LSOAs because you want to show variation in neighbourhoods. Or you can group into police force areas because you want to look at differences between those units of analysis. But sometimes there is just not a geography present to meet your needs.

Let’s say you are conducting some days of action in Manchester city centre, focusing on antisocial behaviour. You are going to put up some information booths and staff them with officers to engage with the local population about antisocial behaviour. For these to be most effective, as an analyst you decide that they should go into the areas with the highest count of antisocial behaviour. You want to be very specific about where you put these as well, and so LSOA level would be too broad, you want to zoom in more. One approach can be to split central Manchester into some smaller polygons, and just calculate the number of antisocial behaviour incidents recorded in each. That way you can then decide to put your information booths somewhere inside the top 5 highest count bins.

5.3.2.2 Rectangular binning

The aggregation of incident point data to regularly shaped grids is used for many reasons such as normalizing geography for mapping or to mitigate the issues of using irregularly shaped polygons created arbitrarily (such as county boundaries or block groups that have been created from a political process). Regularly shaped grids can only be comprised of equilateral triangles, squares, or hexagons, as these three polygon shapes are the only three that can tessellate (repeating the same shape over and over again, edge to edge, to cover an area without gaps or overlaps) to create an evenly spaced grid.

Rectangular binning is the simplest binning method and as such it heavily used. However, there are some reasons why rectangular bins are less preferable over hexagonal bins. Before we cover this, let’s have a look at hexagonal bins.

5.3.2.3 Hexagonal binning

In many applications binning is done using a technique called hexagonal binning. This technique uses hexagon shapes to create a grid of points and develops a spatial histogram that shows different data points as a range or group of pairs with common distances and directions. In hexagonal binning the number of points falling within a particular rectangular or hexagon in a gridded surface is what makes the different colors to easily visualize data (Smith, 2012). Hexagonnal binning was first developed in 1987 and today “hexbinning” is conducted by laying a hexagonal grid on top of 2-dimensional data (Johnson, 2011). Once this is done users can conduct data point counts to determine the number of points for each hexagon (Johnson, 2011). The bins are then symbolized differently to show meaningful patterns in the data.

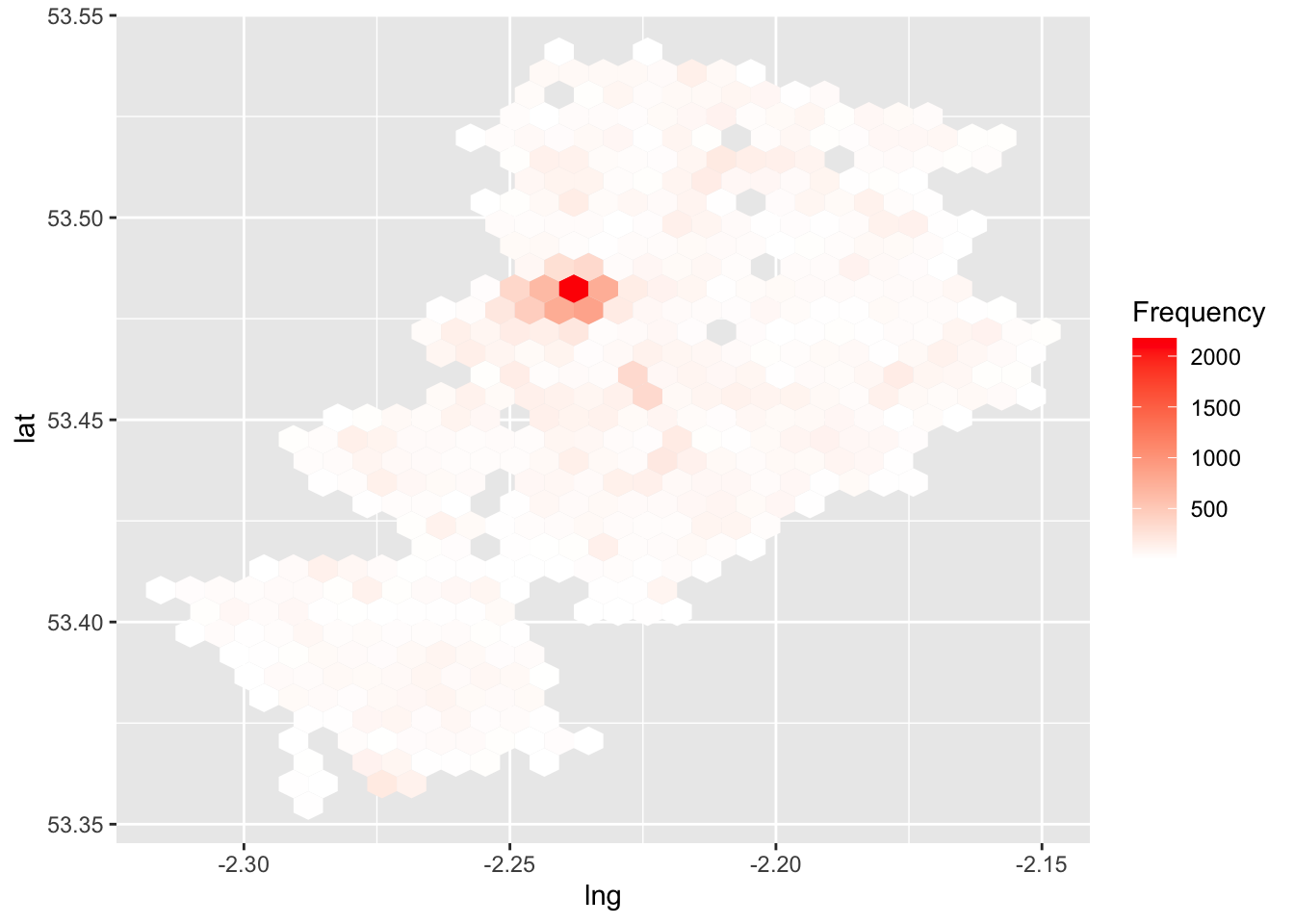

5.3.3 Activity 7: Hexbinning

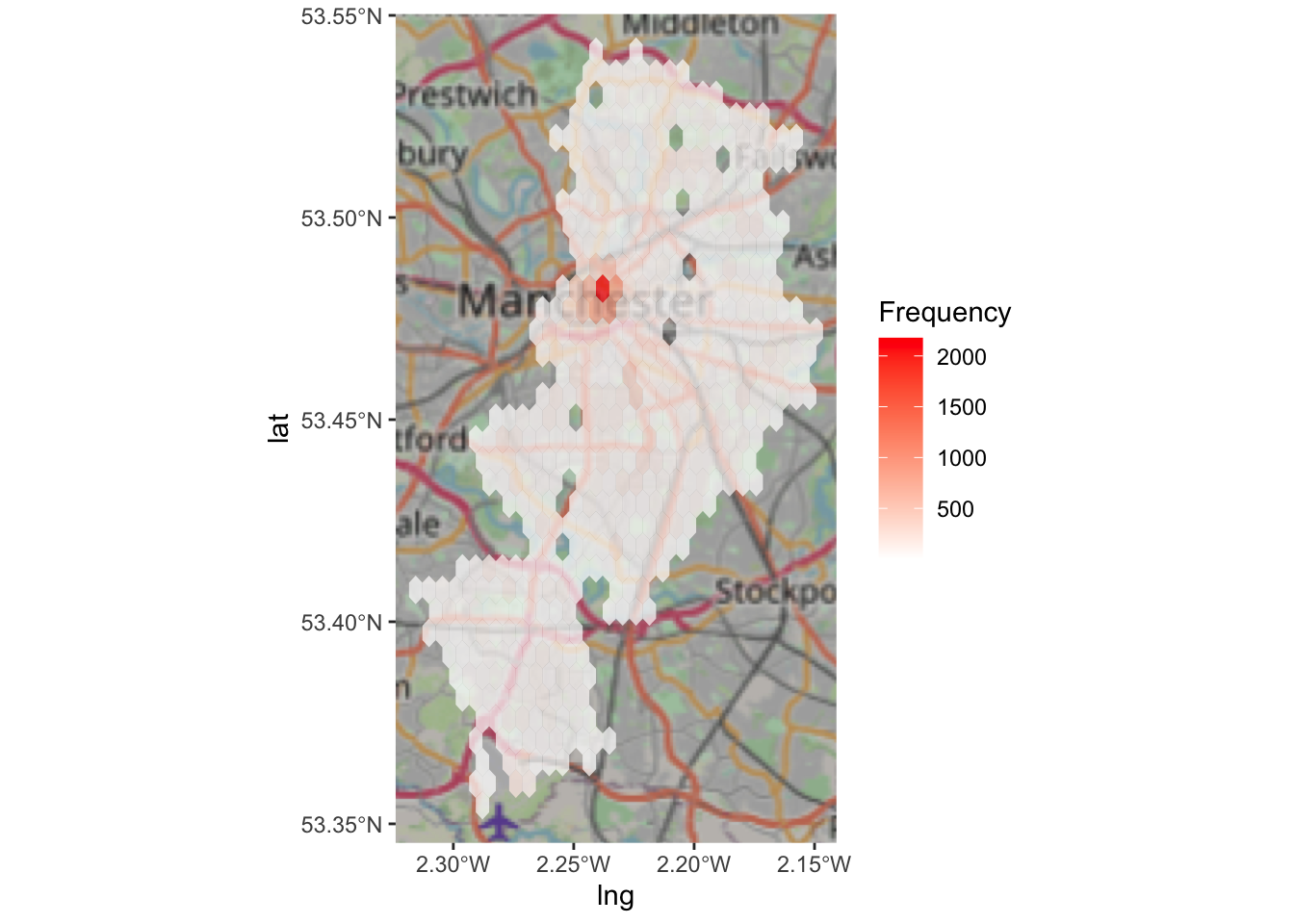

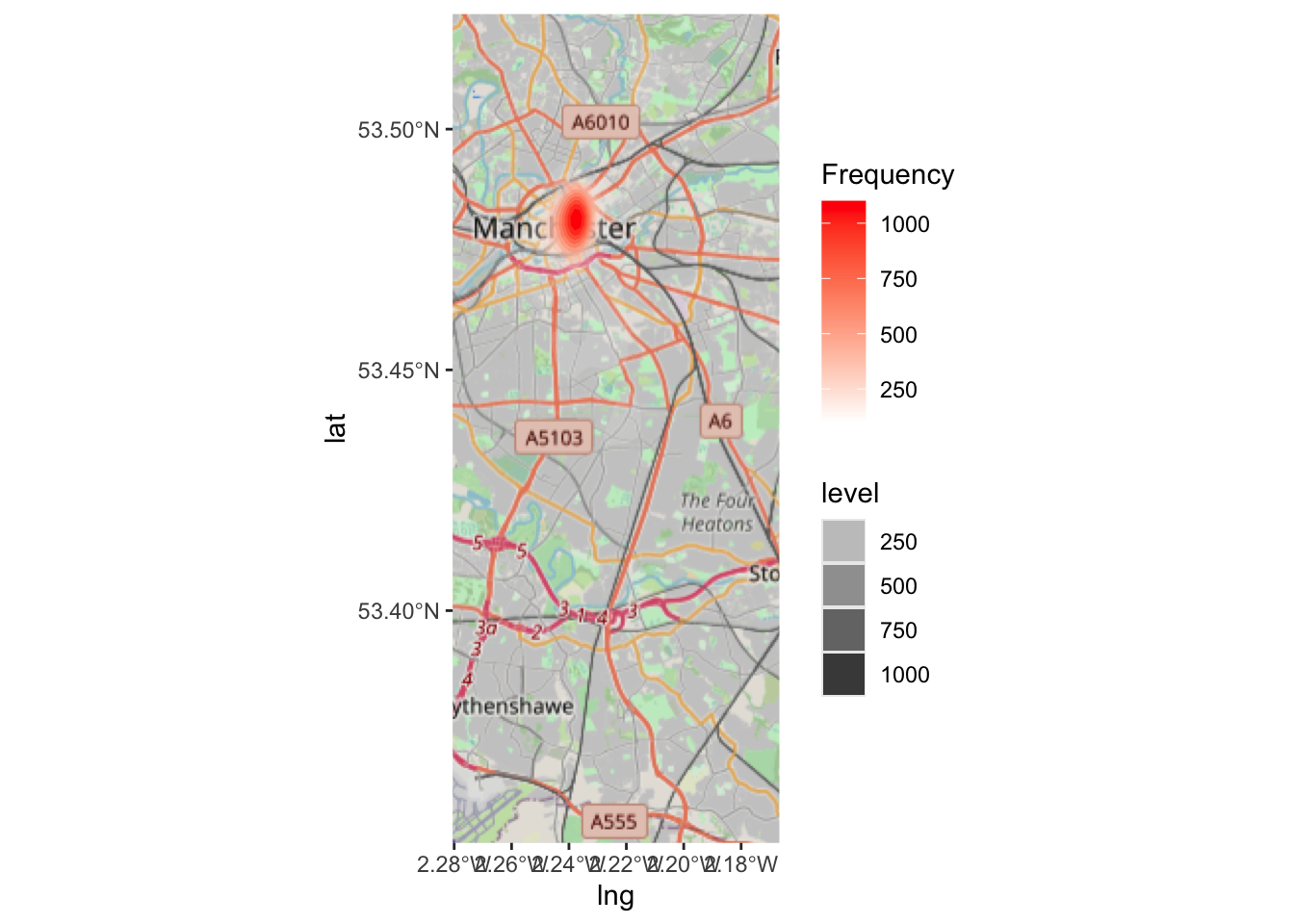

So how can we use hexbinning to solve our antisocial behaviour days of action task? Well let’s say we split Manchester city centre into hexagons, and count the number of antisocial behaviour instances in these. We can then identify the top hexagons, and locate our booths somewhere within these.

Also let’s get some data. You could go and get this data yourself from police.uk, we’ve been through all the steps for downloading data from there a few times now. But for now, I have a tidied set of data ready for you. This data is one year’s worth of antisocial behaviour from the police.uk data, from May 2016 to May 2017, for the borough of Manchester.

We can take our GMP crimes data, and select only the cases from ASB using the crime.type variable. If you want, however, I have already done this, so you can also download from my dropbox using the link here, (or get it from Blackboard):

## New names:

## Rows: 32162 Columns: 15

## ── Column specification

## ─────────────── Delimiter: "," chr

## (8): Month, Reported.by, Falls.within,

## Location, LSOA.code, LSOA.name, C...

## dbl (4): ...1, X, Longitude, Latitude

## lgl (3): Crime.ID,

## Last.outcome.category, Context

## ℹ Use `spec()` to retrieve the full

## column specification for this data. ℹ

## Specify the column types or set

## `show_col_types = FALSE` to quiet this

## message.

## • `` -> `...1`This is currently just a text dataframe, so we need to let R know that actually this is a spatial object, who’s geometry can be find in its longitude and latitude coordinates. As we have long/lat we can assure it’s in WGS 84 projection.

Now one thing that this does is it consumes our lng and lat columns into a geometry attribute. This is generally OK, but for the binning we will do, we would like to have them as separate coordinates. We can do this by adding the argument remove = FALSE to our st_as_sf function. We will also rename these coordinate columns to be lng and lat.

#extract the coords to some columns, "lng" and "lat"

sf_mcr_asb<- st_as_sf(mcr_asb, coords = c("Longitude", "Latitude"),

crs = 4326, agr = "constant", remove = FALSE) %>%

rename(lng = Longitude,

lat = Latitude)As a first step, we can plot asb in the borough of Manchester using simple ggplot! Remember the data visualisation session from weeks ago? We discussed how ggplot is such a great tool for building visualisations, because you can apply whatever geometry best suits your data. So for us to just have a look at the hexbinned version of our point data of antisocial behaviour, we can use the stat_binhex() function. We can also recreate the thematic map element, as we can use the frequency of points in each hex to shade each hexbin from white (least number of incidents) to red (most nuber of incidents).

So let’s have a go:

ggplot(sf_mcr_asb, aes(lng, lat)) + #define data and variables for x and y axes

stat_binhex() + #add binhex layer (hexbin)

scale_fill_gradientn(colours = c("white","red"), name = "Frequency") #add shading based on number of ASB incidents

Neat, but doesn’t quite tell us where that really dark hexbon actually is. So it would be much better if we could do this with a basemap as the background, rather than our grey ggplot theme.

Now, we can apply the same code as we used above, for the ggplot, to this ggmap, to add our hexbins on top of this basemap:

ggplot(sf_mcr_asb, aes(x = lng, y = lat)) +

annotation_map_tile() +

stat_binhex(alpha=0.7) + #add binhex layer (hexbin)

scale_fill_gradientn(colours = c("white","red"), name = "Frequency") #add shading based on number of ASB incidents## Zoom: 10

Now this should give you some more context! Woo!

So I mentioned we’d go over some reasons why you should consider aggregating into a hexagon grid rather than other shape:

- Hexagons reduce sampling bias due to edge effects of the grid shape. The edge effects of bounded space refers to the problem of truncated data that can skew the results of subsequent analyses (we’ll get to this in the next section). This is related to the low perimeter-to-area ratio of the shape of the hexagon. A circle has the lowest ratio but cannot tessellate to form a continuous grid. Hexagons are the most circular-shaped polygon that can tessellate to form an evenly spaced grid.

- This circularity of a hexagon grid allows it to represent curves in the patterns of your data more naturally than square grids.

- When comparing polygons with equal areas, the more similar to a circle the polygon is, the closer to the centroid the points near the border are (especially points near the vertices). This means that any point inside a hexagon is closer to the centroid of the hexagon than any given point in an equal-area square or triangle would be (this is due to the more acute angles of the square and triangle versus the hexagon).

- Hexagons are preferable when your analysis includes aspects of connectivity or movement paths. Due to the linear nature of rectangles, fishnet grids can draw our eyes to the straight, unbroken, parallel lines which may inhibit the underlying patterns in the data. Hexagons tend to break up the lines and allow any curvature of the patterns in the data to be seen more clearly and easily. This breakup of artificial linear patterns also diminishes any orientation bias that can be perceived in fishnet grids.

- If you are working over a large area, a hexagon grid will suffer less distortion due to the curvature of the earth than the shape of a fishnet grid.

- Finding neighbors is more straightforward with a hexagon grid. Since the edge or length of contact is the same on each side, the centroid of each neighbor is equidistant. However, with a fishnet grid, the Queen’s Case (above/below/right/left) neighbor’s centroids are N units away, while the centroids of the diagonal (Rook) neighbors are farther away (exactly the square root of 2 times N units away).

- Since the distance between centroids is the same in all six directions with hexagons, if you are using a distance band to find neighbors or are using the Optimized Hot Spot Analysis, Optimized Outlier Analysis or Create Space Time Cube By Aggregating Points tools, you will have more neighbors included in the calculations for each feature if you are using hexagonal grid as opposed to a fishnet grid.

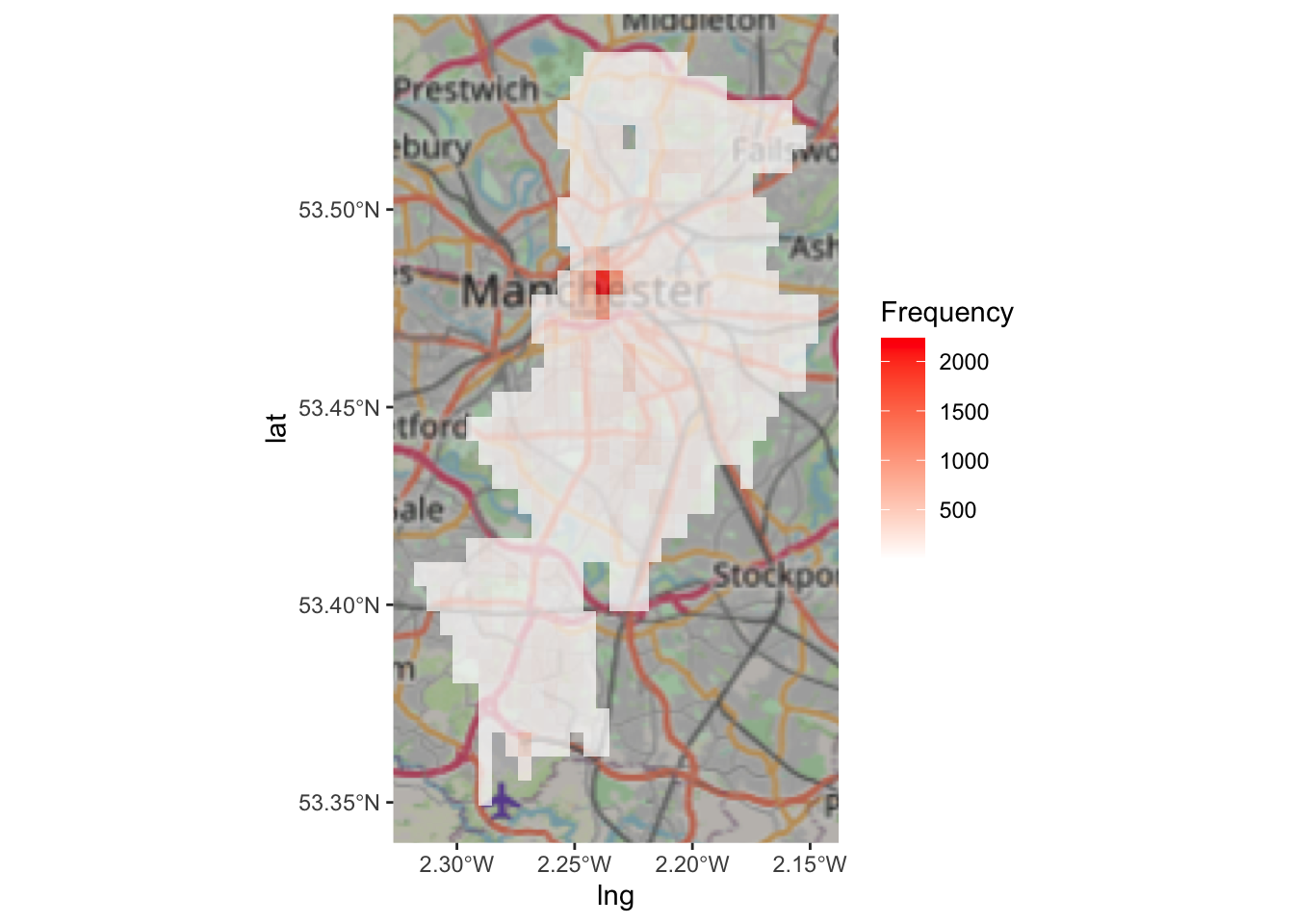

5.3.4 Activity 8: Rectangular binning

Now, to again illustrate the differences of different approaches, let’s see what this map would look like with:

- rectangular binning:

ggplot(sf_mcr_asb, aes(x = lng, y = lat)) +

annotation_map_tile() +

stat_bin2d(alpha=0.7) +

scale_fill_gradientn(colours = c("white","red"),

name = "Frequency") ## Zoom: 10

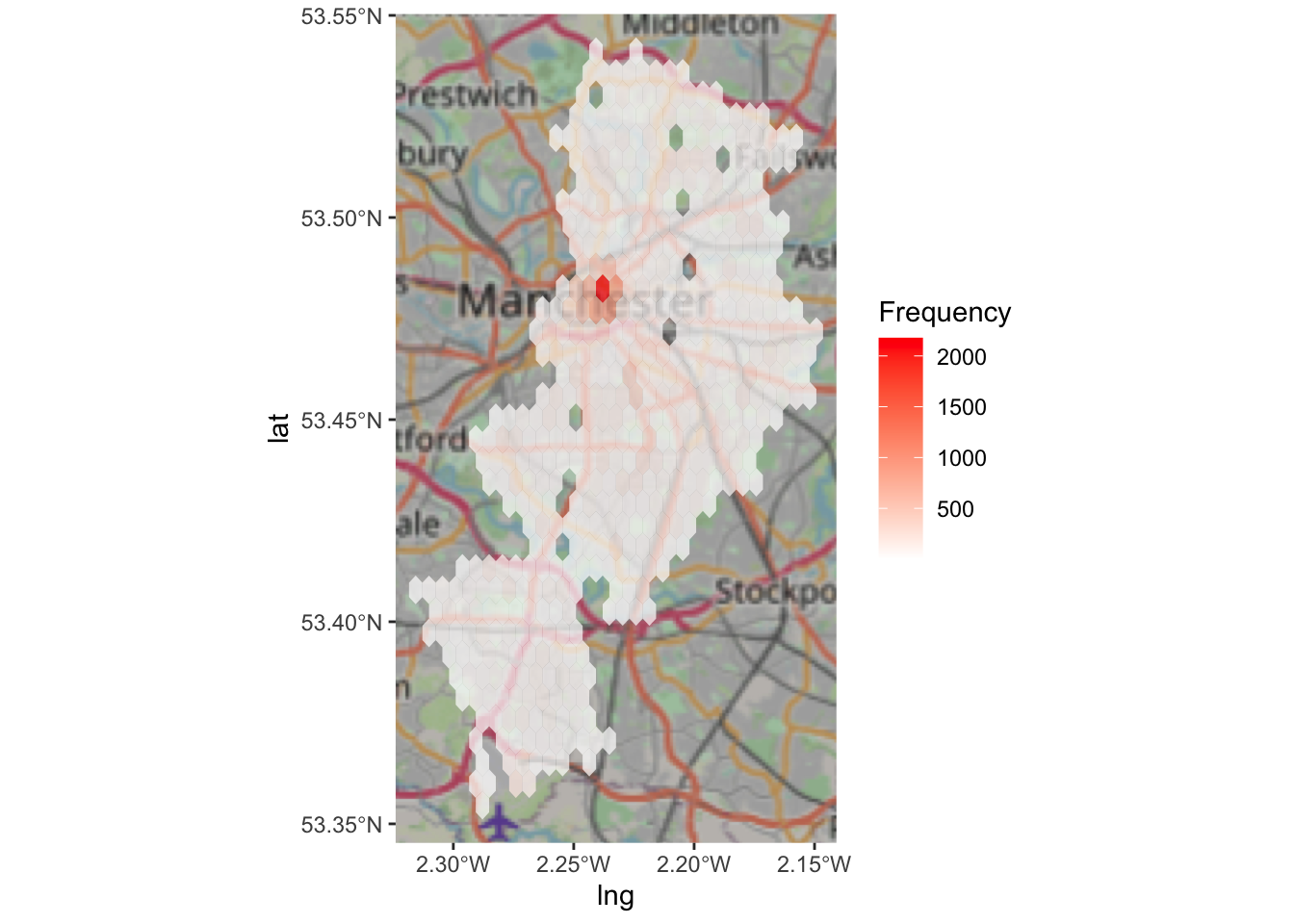

- hexagonal binning:

ggplot(sf_mcr_asb, aes(x = lng, y = lat)) +

annotation_map_tile() +

stat_binhex(alpha=0.7) +

scale_fill_gradientn(colours = c("white","red"),

name = "Frequency")## Zoom: 10

- a simple “heatmap” (we will discuss these more thoroughly next week):

ggplot(sf_mcr_asb, aes(x = lng, y = lat)) +

annotation_map_tile() +

stat_density2d(aes(fill = after_stat(level), # value corresponding to discretized density estimates

alpha = after_stat(level)),

geom = "polygon") + # creates the bands of different colors

## Configure the colors, transparency and panel

scale_fill_gradientn(colours = c("white","red"),

name = "Frequency") +

scale_alpha(guide = "none")## Zoom: 11

Look at the difference between the three maps (hex, rectangle, and density). How would your conclusions change if you were given these maps? Would you make different decisions about where to place your booths for the days of action? Why or why not? Discuss.

5.3.5 Multivariate binning

Multivariate binning is another binning method that lets you visualise slightly more complex data. In this method there can be many different variables consisting of different types of data. Like other binning methods the data is typically grouped with the sum or average of the data. Different types of symbology (such as size, shape and color) can also be used to represent this data as well.

We won’t be covering this here but just so you can have a look at some examples here.

5.3.6 Benefits of Binning

Because of the plethora of data types available and the wide variety of projects being done in GIS, binning is a popular method for mapping complex data and making it meaningful. Binning is a good option for map makers as well as users because it makes data easy to understand and it can be both static and interactive on many different map scales. If every different point were shown on a map it would have to be a very large scale map to ensure that the data points did not overlap and were easily understood by people using the maps.

According to Kenneth Field, an Esri Research Cartographer, “Data binning is a great alternative for mapping large point-based data sets which allows us to tell a better story without interpolation. Binning is a way of converting point-based data into a regular grid of polygons so that each polygon represents the aggregation of points that fall within it.”

By using binning to create categories of data maps are easier to understand, more accurate and more visually appealing.

Hexbin plots can be viewed as an alternative to scatter plots. The hexagon-shaped bins were introduced to plot densely packed sunflower plots. They can be used to plot scatter plots with high-density data.

5.4 A note of caution: MAUP

Now that we’ve shown you how to do a lot of spatial crime analysis, we wanted to close with some words of caution. Remember that everything you’ve learned here are just tools that you will be applying to data you are working with, but it’s up to you, the researcher, the analyst, the domain expert, to apply and use these with careful consideration and cautions. This discussion is very much part of spatial crime analysis, and an important field of thought.

I borrow here from George Renghert and Brian Lockwood:

When spatial analysis of crime is conducted, the analyst should not ignore the spatial units that data are aggregated into and the impact of this choice on the interpretation of findings. Just as several independent variables are considered to determine whether they have statistical significance, a consideration of multiple spatial units of analysis should be made as well, in order to determine whether the choice of aggregation level used in a spatial analysis can result in biased findings.

In particular, they highlight four main issues inherent in most studies of space:

- issues associated with politically bounded units of aggregation,

- edge effects of bounded space

- the modifiable aerial unit problem (MAUP)

- and ways in which the results of statistical analyses can be manipulated by changes in the level of aggregation.

In this lab we will focus on MAUP, but if you are interested in this kind of work, you should definitely read their paper to consider the other issues as well. There are techniques that can be used to alleviate each of the methodological difficulties, and they are described in accessible detail in their paper: Rengert, George F., and Brian Lockwood. “Geographical units of analysis and the analysis of crime.” Putting crime in its place. Springer, New York, NY, 2009. 109-122.

5.4.1 What is MAUP?

The Modifiable Areal Unit Problem (MAUP) is an important issue for those who conduct spatial analysis using units of analysis at aggregations higher than incident level. It is one of the better-known problems in geography and spatial analysis. This phenomenon illustrates both the need for considering space in one’s analysis, and the fundamental uncertainties that accompany real-world analysis.

The MAUP is “a problem arising from the imposition of artificial units of spatial reporting on continuous geographical phenomena, leading to artifacts or errors are created when one groups data into units for analysis.

The classic text on MAUP is the 1983 paper Openshaw, Stan. “The modifiable areal unit problem. CATMOG (Concepts and techniques in modern geography) 38.” Geo Abstracts, Norwich. 1984..

There are two distinct types of MAUP: Scale (i.e. determining the appropriate size of units for aggregation) and zone (i.e. drawing boundaries or grouping).

5.4.1.1 Scale

The scale problem involves results that change based on data that are analyzed at higher or lower levels of aggregation (Changing the number of units). For example, evaluating data at the state level vs. Census tract level.

The scale problem has moved to the forefront of geographical criminology as a result of the recent interest in small-scale geographical units of analysis. It has been suggested that smaller is better since small areas can be directly perceived by individuals and are likely to be more homogenous than larger areas. - Gerell, Manne. “Smallest is better? The spatial distribution of arson and the modifiable areal unit problem.” Journal of quantitative criminology 33.2 (2017): 293-318.

5.4.1.2 Zone

The zonal problem involves keeping the same scale of research (say, at the state level) but changing the actual shape and size of those areas.

The basic issue with the MAUP is that aggregate units of analysis are often arbitrarily produced by whom ever is in charge of creating the aggregate units. A classic example of this problem is known as Gerrymandering. Gerrymandering involves shaping and re-shaping voting districts based on the political affiliations of the resident citizenry.

The inherent problem with the MAUP and with situations such as Gerrymandering is that units of analysis are not based on geographic principles, and instead are based on political and social biases. For researchers and practitioners the MAUP has very important implications for research findings because it is possible that as arbitrarily defined units of analysis change shape findings based on these units will change as well.

When spatial data are derived from counting or averaging data within areal units, the form of those areal units affects the data recorded, and any statistical measures derived from the data. Modifying the areal units therefore changes the data. Two effects are involved: a zoning effect arising from the particular choice of areas at a given scale; and an aggregation effect arising from the extent to which data are aggregated over smaller or larger areas. The modifiable areal unit problem arises in part from edge effect.

If you’re interested, in particular about politics and voting, you can read this interesting piece to learn more about gerrymandering

5.4.2 Why does MAUP matter?

The practical implications of MAUP are immense for almost all decision-making processes involving GIS technology, since with the availability of aggregated maps, policy could easily focus on issues and problems which might look different if the aggregation scheme used were changed .

All studies based on geographical areas are susceptible to MAUP. The implications of the MAUP affect potentially any area level data, whether direct measures or complex model-based estimates. Here are a few examples of situations where the MAUP is expected to make a difference:

- The special case of the ecological fallacy is always present when Census area data are used to formulate and evaluate policies that address problems at individual level, such as deprivation. Also, it is recognised that a potential source of error in the analysis of Census data is ‘the arrangement of continuous space into defined regions for purposes of data reporting’

- The MAUP has an impact on indices derived from areal data, such as measures of segregation, which can change significantly as a result of using different geographical levels of analysis to derive composite measures .

- The choice of boundaries for reporting mortality ratios is not without consequences: when the areas are too small, the values estimated are unstable, while when the areas are too large, the values reported may be over-smoothed, i.e. meaningful variation may be lost .

- Gerell, Manne. “Smallest is better? The spatial distribution of arson and the modifiable areal unit problem.” Journal of quantitative criminology 33.2 (2017): 293-318.

5.4.3 What can we do?

Most often you will just have to remain aware of the MAUP and it’s possible effects. There are some techniques, that can help you address these issues, and the chapter pointed out at the beginning of this section is a great place to start to explore these. It is possible to use also an alternative, zone-free approach to mapping these crime patterns, perhaps by using kernel density estimation. Here we model the relative density of the points as a density surface - essentially a function of location (x,y) representing the relative likelihood of occurrence of an event at that point. We have covered KDE elsewhere in this course.

For the purposes of this course, it’s enough that you know of, and understand the MAUP and its implications. Always be smart when choosing your appropriate spatial unit of analysis, and when you use binning of any form, make sure you consider how and if your conclusions might change compared to another possible approach.

5.5 Transforming polygons

When you have meaningful spatial units of analysis in your polygons, for example you are interested specifically in Local Authorities, it might make sense to stick with what we did last week, and aggregate the points into these polygons to create thematic maps. However, while thematic maps are an accessible and visually appealing method for displaying spatial information, they can also be highly misleading. Irregularly shaped polygons and large differences in the size of areas being mapped can introduce misrepresentation. The message researchers want to get across might be lost, or even worse, misdirect the viewers to erroneous conclusions. This article provides a helpful discussion of the problem illustrating the case with UK election maps. It is worth reading.

Fortunately, there are many methods in R to enhance the legibility of geographic information and the interpretability of what it is trying to be communicated.

Broadly, the options are:

- cartogram

- hexmap

- grid

Selecting the appropriate method might depend on the research question being posed (e.g. clustering) and the data itself. Even once a method has been selected, there are different ways of operationalising them.

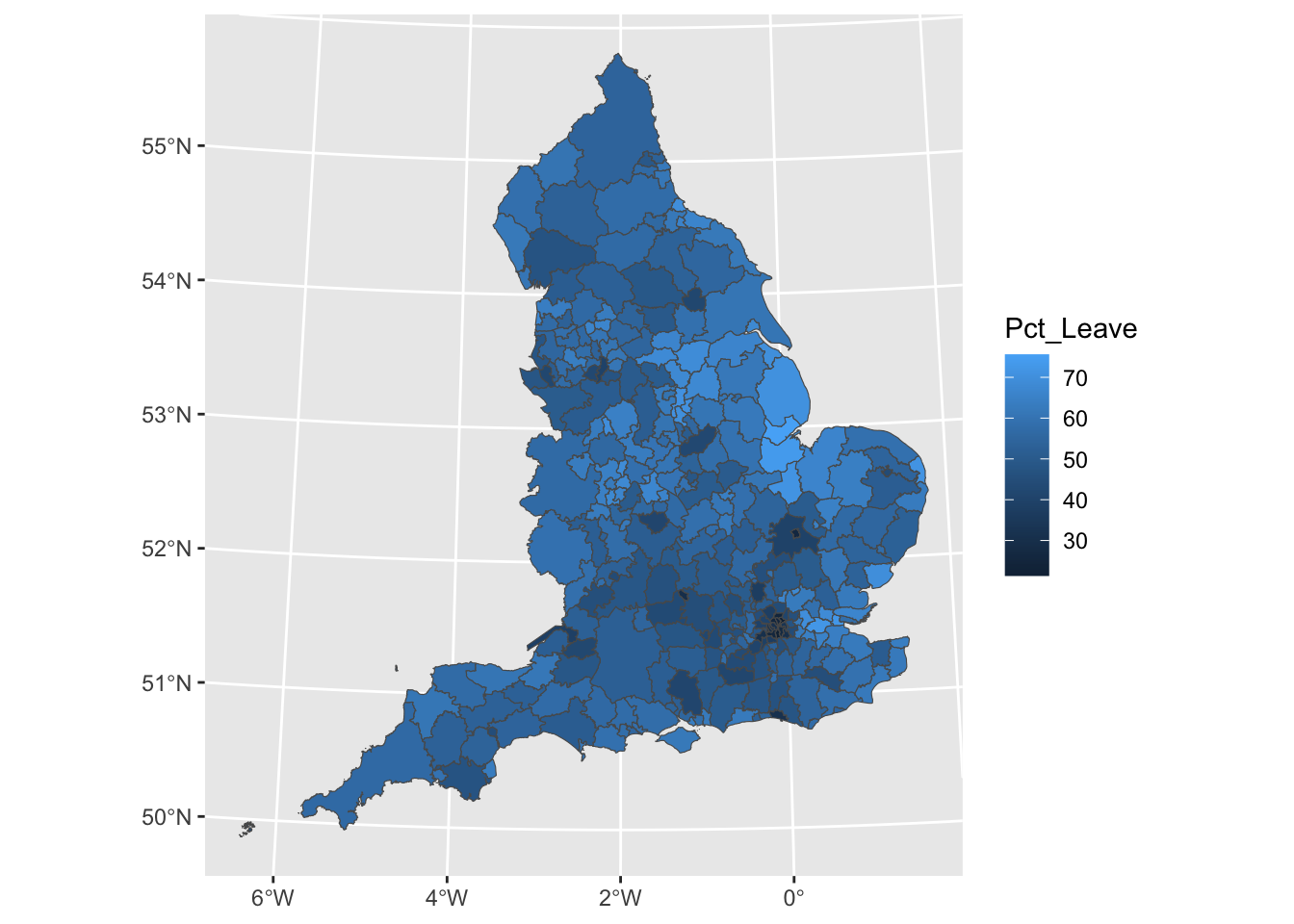

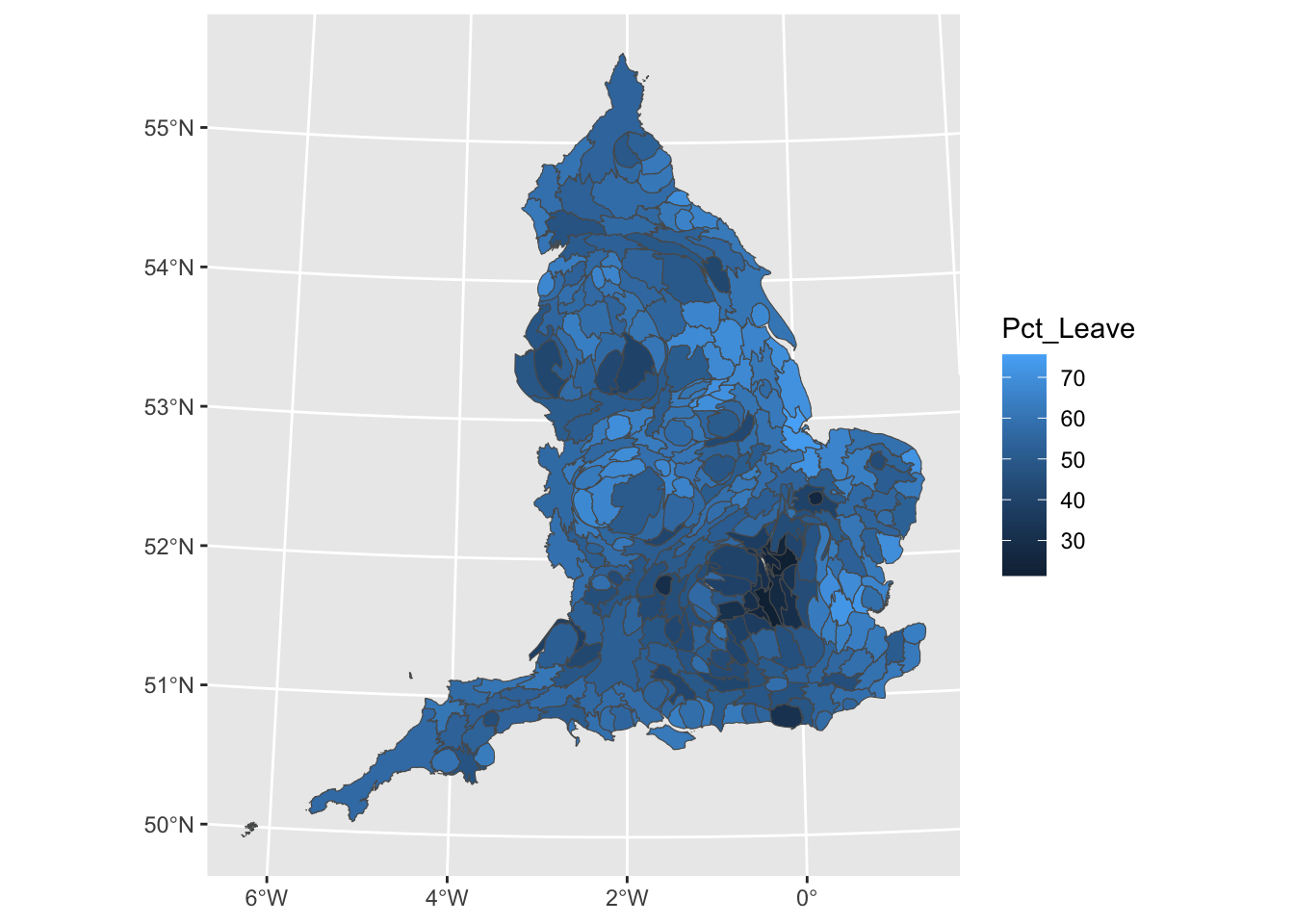

Let’s explore this using the example of the results of the 2016 EU referendum at Local Authority level, where remain areas clustered in London. A simple thematic map does not necessarily communicate this well because Local Authorities are both small and densely populated in London.

You can download the full set of EU referendum result data as a csv from the Electoral Commission webside. Let’s read it straight into R:

eu_ref <- read_csv("https://www.electoralcommission.org.uk/sites/default/files/2019-07/EU-referendum-result-data.csv")## Rows: 382 Columns: 21

## ── Column specification ───────────────

## Delimiter: ","

## chr (4): Region_Code, Region, Area_Code, Area

## dbl (17): id, Electorate, ExpectedBallots, VerifiedBallotPapers, Pct_Turnout...

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.OKAY, now we need a shapefile to join it to. Remember when we got the Manchester lsoa shapefile with the boundary selector? Let’s go back, and this time get Local Authority Districts for England.

In this case that means select “English Districts, UAs and London Boroughs, 2011”:

Once you have the file, download, extract (unzip) and put the folder in your working directory. Mine is in a subfolder in my working directory called data, so I point R inside that folder to find my shape file.

## Reading layer `england_lad_2011_gen' from data source

## `/Users/user/Desktop/resquant/crime_mapping_textbook/data/England_lad_2011_gen/england_lad_2011_gen.shp'

## using driver `ESRI Shapefile'

## Simple feature collection with 326 features and 4 fields

## Geometry type: MULTIPOLYGON

## Dimension: XY

## Bounding box: xmin: 82644.8 ymin: 5349.399 xmax: 655976.9 ymax: 657599.5

## Projected CRS: OSGB36 / British National GridWe can now join the EU referendum data, as we have learned in the past weeks:

Now we can have a look at these data:

We can see that in smaller LAs we don’t even really see the result, as the boundary lines pretty much cover everything. Hmm. Now what we can do is transform the shapes.

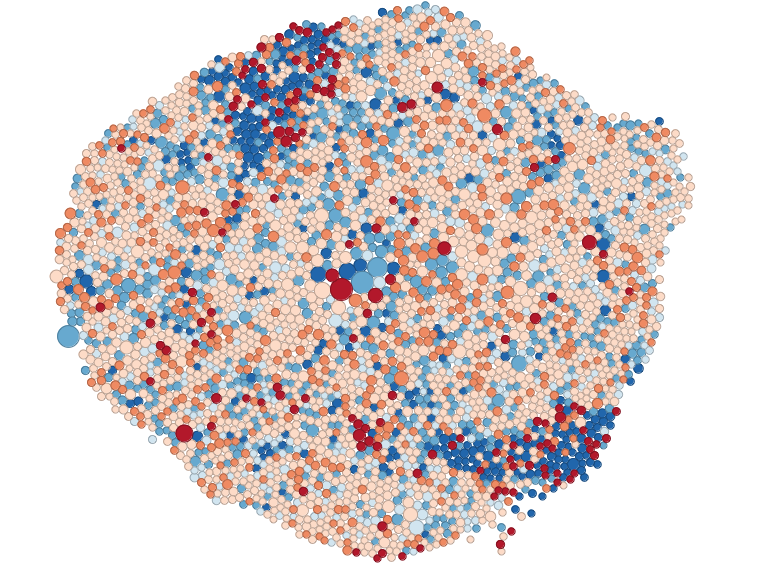

5.5.1 Activity 9: Cartograms

The last thing we will do today is make some cartograms! Cartogram types of maps distort reality to convey information. They resize and exaggerate any variable on an attribute value.

There are different types of cartograms.

Density-equalizing (contiguous) cartograms are your traditional cartograms. In density-equalizing cartograms, map features bulge out a specific variable. Even though it distorts each feature, it remains connected during its creation. On the other hand, you can have Non-Contiguous Cartograms, where features in non-contiguous cartograms don’t have to stay connected. Finally, Dorling Cartogram (named after professor Danny Dorling) uses shapes like circles and rectangles to depict area. These types of cartograms make it easy to recognize patterns!

Now we can make our own as well, using the cartogram package.

Within that there is the cartogram_cont() function, which takes 2 arguments, 1 - the shape file (it can be a SpatialPolygonDataFrame or an sf object), and 2 - the variable which it should use to distort the polygon by.

In our data set we have a variable “electorate” which refers to the total number of registered electors, which we will use to distort the polygons:

# construct a cartogram using the size of the electorate in each LA

eu_cartogram <- cartogram_cont(eu_sf, "Electorate")Again this is some labour intensive work, much like the grid making, you have some time to chill now. Maybe read up on the maths behind this tranformation as well, in the paper Dougenik, J. A., Chrisman, N. R., & Niemeyer, D. R. (1985). An Algorithm To Construct Continuous Area Cartograms. In The Professional Geographer, 37(1), 75-81..

I do have a tip for you if you want to make sure the process does not take too long. You can set a parameter in the cartogram function which is the “itermax” parameter. This specifies the maximum number of iterations we are happy with. If you don’t specify it’s set to 15. Let’s set to 5 for the sake of speed:

# construct a cartogram using the size of the electorate in each LA

eu_cartogram <- cartogram_cont(eu_sf, "Electorate", itermax = 5)And if your cartogram has been created, you can now plot again the referendum results, but using the electorate to change the size of the local authority:

# plot the percentage voting leave onto our cartogram

ggplot() +

geom_sf(data = eu_cartogram, aes(fill = Pct_Leave))

We can now see London much better, and see that darker coloured cluster where much smaller percentage of people voted leave.

Okay that’s probably enough for the day. Nice work crime mappers!

5.6 References and further reading

5.6.1 Binning

- Johnson, Zachary Forest. (18 October 2011). “Hexbins!” Retrieved from: http://indiemaps.com/blog/2011/10/hexbins/ (8 August 2014).

- Smith, Nate. (25 May 2012). “Binning: An Alternative to Point Maps.” Mapbox. Retrieved from: https://www.mapbox.com/blog/binning-alternative-point-maps/ (8 August 2014).

- Claudia A Engel’s fantastic R-pub on Making Maps in R.

- Hexbin Graph Gallery

- US Drought Hexmap

- Hexbin with ggplot2

5.6.2 MAUP

- Gerell, Manne. “Smallest is better? The spatial distribution of arson and the modifiable areal unit problem.” Journal of quantitative criminology 33.2 (2017): 293-318.

- Openshaw, Stan. “The modifiable areal unit problem. CATMOG (Concepts and techniques in modern geography) 38.” Geo Abstracts, Norwich. 1984..

- Rengert, George F., and Brian Lockwood. “Geographical units of analysis and the analysis of crime.” Putting crime in its place. Springer, New York, NY, 2009. 109-122.

5.6.3 Transforming polygons

- Waldo Tobler (2004) Thirty Five Years of Computer Cartograms, Annals of the Association of American Geographers, 94:1, 58-73, DOI: 10.1111/j.1467-8306.2004.09401004.x

- Langton, S.H. & Solymosi, R. (2018) ‘Visualising geographic information: examining methods of improving the thematic map.’ RPubs. Available: https://rpubs.com/langton_/visual_geography_study